Artificial Intelligence Made Me Write This

I promised I wouldn’t do it, but these are unusual times....

[7 minute read]

The former principals of The KCP Group have formed Sapient Capital!

This new Substack is titled “What’s Important?”

Sapient’s motto is “we know what’s important.” That’s a tribute to my favourite definition of wisdom as “knowing what information is important.”

Twice a month I am going to explore topics that I think are either immediately important or permanently important. This week’s focus might tick both boxes.

I try not to write about things that are “news”, especially on topics where I am nowhere near an expert.

But this unusual moment in time requires an exception.

The hype and concern around Artificial Intelligence is currently unlike anything I’ve ever seen. We’re at the point where not writing about it would be weirder.

One of my favourite Onion headlines is from 2008: “Recession-plagued nation demands new bubble to invest in.”

Skeptics say the crypto and Web3 busts have simply given way to the next shiny thing for VCs. I was sympathetic to that view until I started reading more and talking to people I trust in the field. This might be one of those really rare situations when everybody is talking about something but it’s still under-hyped.

For example, the normally rather measured Ben Thompson of the tech blog Stratechery described his interaction with Bing Chat as “the most surprising and mind-blowing computer experience of my life.” Disturbing and enthralling interactions with A.I. chat have been literally front-page news for several weeks now.

UBS claims that ChatGPT has experienced the steepest adoption of any consumer technology in history. It hit 1m users in 5 days and 100m within 2 months. And GPT-4 was released just last week.

Here at Sapient Capital we always insist that experts tell us first what’s happening right now, then their opinion, then their forecast. So here are some aggregated views in each category. I end with a characteristically bonkers thought-experiment.

What’s happening right now?

- Our goal is to “see the present clearly.” The current A.I. landscape is moving so fast even this is very difficult to do. My default is generally caution. But Wharton professor Ethan Mollick has argued that two early papers have already found:

“The effects of generative AI on knowledge work are completely unprecedented in modern history. Separate studies of both writers and programmers find 50% increases in productivity with AI, and higher performance and satisfaction…

….Unlike almost every other technological revolution, this isn't a "if technology keeps developing like this it will be amazing" situation. These studies use the flawed AI systems available right now, today. Even if AI development stopped, it would have profound effects on work.”

GPT-4 is already passing a huge number of professional exams, including scoring in the top 10% of test takers on a simulated Bar Exam.

Text and image A.I. platforms are flooding the market with user-created content. This is not only creating an information glut; we’re also seeing a proliferation of remarkably convincing audio and video fakes (it’s going to be a wild presidential election season).

This is increasing the value of human curation and synthesis, but potentially lowering the value of artistic output (for now).

Homework looks like the first casualty: the output seems good enough to fool most plagiarism detectors, even though chat has a propensity to offer inaccurate answers.

What’s the near-term outlook?

- One common forecast is that chat disrupts search. Essentially, the future vision is that you ask the chat a question and it synthesizes a “complete” answer rather than giving you links to visit. Twitter co-founder Ev Williams once said the fastest way to build a billion-dollar company was to identify a basic human desire and take out steps. A.I. chat seems to fit that model!

- We seem very, very close to an audio-first virtual assistant akin to “Samantha” from the movie Her. This was Ben Thompson’s first impression. “This technology does not feel like a better search. It feels like something entirely new — the movie Her manifested in chat form — and I’m not sure if we are ready for it.” Whether this is positive or negative is a subjective value call.

- Moravec’s paradox observes that high-level reasoning is comparatively easy for computers while sensorimotor skills are enormously hard, which implies AI was always more likely to disrupt white-collar than blue-collar work. Training a robot to write code is vastly less complex than giving it the sensorimotor skills required to wait tables. The combination of essential work, work from home and now mainstream AI seems to be accelerating this shift.

- Noah Smith recently conducted an incredibly rich interview with one of the most thoughtful long-term technology commentators, Kevin Kelly. The whole interview is worth reading for many reasons, not least its generally optimistic take on AI.

Despite the relentless hype, I think AI overall is underhyped. The long-term effects of AI will affect our society to a greater degree than electricity and fire, but its full effects will take centuries to play out….

… The relationship AIs will have with us will tend towards being partners, assistants, and pets, rather than gods. This first round of primitive AI agents like ChatGPT and Dalle are best thought of as universal interns. It appears that the millions of people using them for the first time this year are using these AIs to do the kinds of things they would do if they had a personal intern: write a rough draft, suggest code, summarize the research, review the talk, brainstorm ideas, make a mood board, suggest a headline, and so on. As interns, their work has to be checked and reviewed [emphasis added].

- Tyler Cowen has recently argued that A.I. is one of the emerging technologies that might finally end the “Great Stagnation.” One of his central theses is that progress across a wide variety of areas largely plateaued from 1973 onwards.

“I think within two years or so, AI will write about half of all computer code, and it will write the boring half. So programmers will be freed up to be more creative, or to try new areas where the grunt work is more or less done for them. That will be significant. Of course, the code has to be edited and checked, there will be errors. But it writes so much of it for you so quickly. And I think that will lead indirectly to some fantastic breakthroughs and creativity of programming…

…And then, individuals will have individualized tutors in virtually every area of human knowledge. That is something that’s not thirty years off—I think it’s within one year, when GPT-4 is released, or when Anthropic is released. So imagine having this universal tutor. It’s not perfect, but much better than what you had before. We’ll see how these things are priced and financed. But that, to me, is a very significant breakthrough.”

What are the highly speculative trends?

- A.I. Heaven: A.I. frees the masses from the drudgery of intellectual busywork and gives us all the freedom to be more human. There is an explosion of artistic flowering and we all go outside and touch grass.

- A.I. Hell: There is obviously a lot of concern (from credible people!) that we are toying with the creation of Artificial General Intelligence (AGI). The average person working in “A.I. alignment” thinks there’s about a 30% chance of existential risk. The second-most intelligent entities on earth don’t tend to fare very well (calamari).

- For those who note that the A.I. applications we’re seeing currently are mostly constrained to chat and text, I would ask how a chat that’s 1,000x more intelligent than us could influence our behaviour. Alex Garland’s excellent movie Ex Machina might be worth re-watching.

- The most thoughtful arguments I’ve read on the alarmed end of the spectrum are from neuroscientist Erik Hoel. He has summarised and responded to all the dismissals of his concerns in a recent essay. I recommend that you give his piece a read.

“And what is more dangerous? The atom bomb, or a single entity significantly more intelligent than any human? The answer is the entity significantly more intelligent than any human, since intelligence is the most dangerous quality in existence. It’s the thing that makes atom bombs. Atom bombs are just like this inconsequential downstream effect of intelligence. If you think this is sci-fi, I remind you that superintelligences are what the leaders of these companies are expecting to happen…

…The only acceptable current argument against AI safety is an unshakeable certainty that we are nowhere near getting surpassed. But even if that’s true, it just pushes the problem down the road. In other words, AI safety as a global political, social, and technological issue is inevitable.”

As of this week, he thinks AGI is already here.

And finally….

….A borderline insane thought experiment.

As you can tell, I don’t know much about A.I. specifically. But this unexpected acceleration fits several of the archetypal themes I’ve studied for many years. I’ve also been thinking and writing about a tipping point in culture for several years now.

This sudden shift in A.I. has me wondering more than ever before if it’s here. Now.

Everything evolves through cycles of competition and cooperation, but at ever greater scales. For humanity, that means we’ve gone from isolated tribal conflicts to world wars. Conflict has also produced correspondingly higher levels of technological progress and international cooperation: nukes and the United Nations.

The conflict stages are frequently defined by an imbalance towards the excessively predatory side of our nature. We saw this in the cold, rational thirst for power that defined fascism and communism throughout the 20th century. To put it in my favourite neurological terms, the left hemisphere has tried to usurp the holistic right.

The dark question I am pondering, looking at the inexorable cycle of conflict, is: were the two world wars our “final” wars, or is there an even larger, planetary-scale conflict ahead? If there were, it’s an interesting thought experiment that it might be against AGI. Those who worry about AGI suspect it would be infinitely rational, powerful and hostile to organic life. The Left Hemisphere summoned into existence. It ticks a lot of boxes.

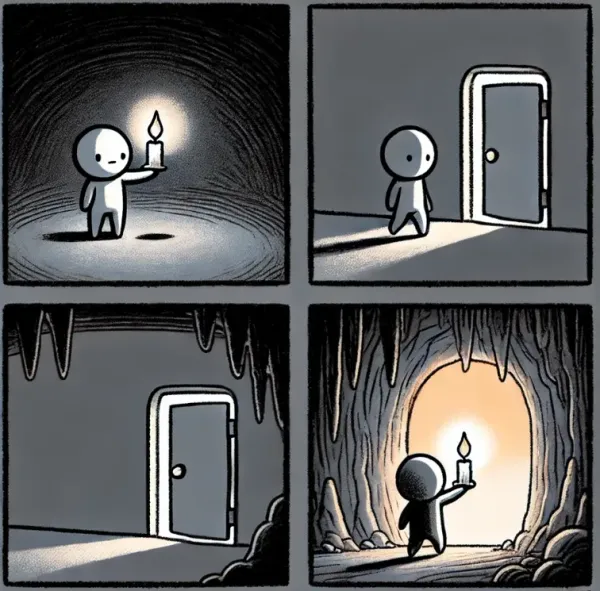

A central insight from myth is that we ignore the call to adventure until a crisis forces us to. For the last few hundred years (at least), it has consistently been this kind of disembodied, out-of-control rationalism threatening life.

The nature of the conflict with the left hemisphere is that something BIG must be sacrificed in order to avoid us being devoured. I wonder if we might need to sacrifice the exact same safety and predictability that technology has given us. For example, what if we were forced, through desperate crisis, to turn off the internet? A sacrifice of almost incomprehensible cost.

…Probably not, but A.I. might force us to use our suddenly superhuman tools more responsibly.

We have the technological power of gods, but without the cooperation or wisdom to understand our corresponding responsibility. A species-level threat would force us to grow up overnight.

“Every transformation demands as its precondition ‘the ending of a world’ - the collapse of an old philosophy of life.” – Carl Jung

Thanks for reading! Subscribe below for the twice-monthly “What’s Important?” from Sapient Capital.